
Abstract

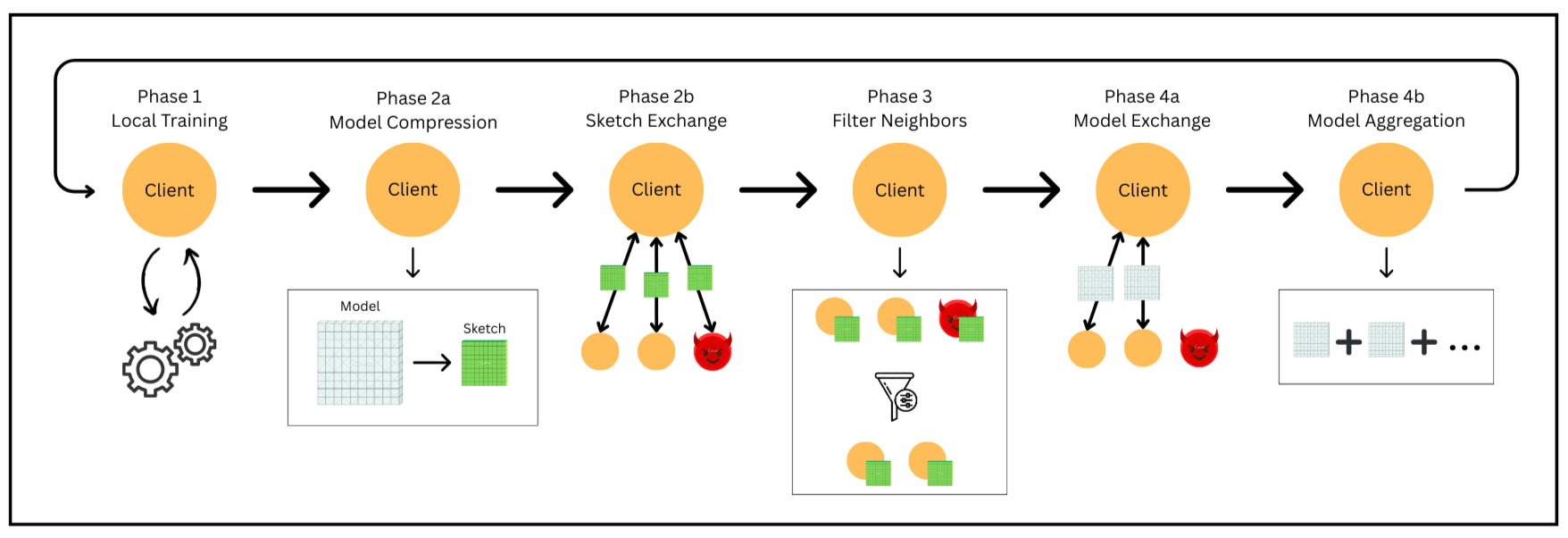

Decentralized Federated Learning (DFL) enables privacy-preserving collaborative training without centralized servers, but remains vulnerable to Byzantine attacks in which malicious clients submit corrupted model updates. Existing Byzantine-robust DFL defenses rely on similarity-based neighbor screening that requires every client to exchange and compare complete high-dimensional model vectors with all neighbors in each training round, creating prohibitive communication and computational costs that prevent deployment at web scale. We propose SketchGuard, a general framework that decouples Byzantine filtering from model aggregation through sketch-based neighbor screening. SketchGuard compresses $d$-dimensional models to $k$-dimensional sketches ($k \ll d$) using Count Sketch for similarity comparisons, then selectively fetches full models only from accepted neighbors, reducing per-round communication complexity from $O(d|\mathcal{N}_i|)$ to $O(k|\mathcal{N}_i| + d|\mathcal{S}_i|)$, where $|\mathcal{N}_i|$ is the neighbor count and $|\mathcal{S}_i| \le |\mathcal{N}_i|$ is the accepted neighbor count. We establish rigorous convergence guarantees in both strongly convex and non-convex settings, proving that Count Sketch compression preserves Byzantine resilience with controlled degradation: approximation errors change the effective threshold parameter by only a $(1+O(\epsilon))$ factor. Comprehensive experiments across multiple datasets, network topologies, and attack scenarios demonstrate that SketchGuard maintains robustness identical to state-of-the-art methods, with mean test error rates deviating by at most 0.35 percentage points, while reducing computation time by up to 82% and communication overhead by 50-70% depending on filtering effectiveness. These benefits scale multiplicatively with model dimensionality and network connectivity.
These results establish the viability of sketch-based compression as a fundamental enabler of robust DFL at web scale.
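The screening step described above can be illustrated with a minimal sketch. It assumes a single-row Count Sketch (a hash $h: [d] \to [k]$ plus a random sign $s: [d] \to \{\pm 1\}$) shared by all clients through a common seed, and a hypothetical acceptance rule that keeps a neighbor when its sketch lies within `gamma` times the norm of the client's own sketch. The abstract does not state SketchGuard's exact screening rule, sketch configuration, or threshold schedule, so `make_count_sketch`, `screen_neighbors`, `gamma`, and the toy data are illustrative assumptions rather than the authors' implementation. The key property is that Count Sketch is linear, so the distance between two sketches is the sketch of the model difference, whose squared norm is an unbiased estimate of the squared full-model distance.

```python
import math
import random


def make_count_sketch(d, k, seed=0):
    """Draw the Count Sketch hash h: [d] -> [k] and sign s: [d] -> {-1, +1}.

    Assumption: every client uses the same seed, otherwise sketches are not comparable.
    """
    rng = random.Random(seed)
    h = [rng.randrange(k) for _ in range(d)]
    s = [rng.choice((-1.0, 1.0)) for _ in range(d)]
    return h, s


def sketch(x, h, s, k):
    """Compress a d-dimensional vector x into k buckets: S[h(j)] += s(j) * x[j]."""
    out = [0.0] * k
    for j, xj in enumerate(x):
        out[h[j]] += s[j] * xj
    return out


def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))


def sketch_distance(a, b):
    """Distance between two sketches. The sketch is linear, so a - b is the sketch
    of x - y, and its squared norm is an unbiased estimate of ||x - y||^2."""
    return norm([ai - bi for ai, bi in zip(a, b)])


def screen_neighbors(own_sketch, neighbor_sketches, gamma):
    """HYPOTHETICAL acceptance rule: accept a neighbor if its sketch lies within
    gamma * ||own sketch|| of the client's own sketch. Returns accepted ids (S_i)."""
    threshold = gamma * norm(own_sketch)
    return [nid for nid, nb_sketch in neighbor_sketches.items()
            if sketch_distance(own_sketch, nb_sketch) <= threshold]


# Toy example: d = 10,000 parameters compressed to k = 500 sketch entries.
d, k = 10_000, 500
h, s = make_count_sketch(d, k, seed=42)
rng = random.Random(1)
own = [rng.gauss(0.0, 1.0) for _ in range(d)]
honest = [v + rng.gauss(0.0, 0.01) for v in own]  # small perturbation of own model
byzantine = [v + 10.0 for v in own]               # crude shifted (corrupted) update


def sk(x):
    return sketch(x, h, s, k)


accepted = screen_neighbors(sk(own), {"honest": sk(honest), "byz": sk(byzantine)}, gamma=0.5)
print(accepted)
```

In this toy run the honest neighbor's sketch distance is close to 1 while the shifted update's is close to 1000, so only the honest neighbor is accepted, and only its full model would then be fetched for aggregation. Each comparison touches $k$ values instead of $d$.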

Figure 1: The SketchGuard Protocol
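The communication saving of the protocol follows directly from the per-round complexity stated in the abstract: every neighbor sends a $k$-dimensional sketch, and only the $|\mathcal{S}_i|$ accepted neighbors send full $d$-dimensional models. The short calculation below makes that arithmetic concrete. The function names and the numbers in the example are hypothetical and illustrative, not measurements from the paper, and constant factors hidden by the $O(\cdot)$ notation are ignored.

```python
def floats_received_per_round(d, k, n_neighbors, n_accepted):
    """Floats one client receives in a round (constant factors ignored).

    Full-model screening: every neighbor sends its d-dim model   -> d * |N_i|
    Sketch-based screening: every neighbor sends a k-dim sketch,
    then only accepted neighbors send full models                -> k * |N_i| + d * |S_i|
    """
    if not 0 <= n_accepted <= n_neighbors:
        raise ValueError("expected 0 <= |S_i| <= |N_i|")
    full = d * n_neighbors
    sketched = k * n_neighbors + d * n_accepted
    return full, sketched


def relative_savings(d, k, n_neighbors, n_accepted):
    """Fraction of traffic saved: (|N_i| - |S_i|) / |N_i| - k / d."""
    full, sketched = floats_received_per_round(d, k, n_neighbors, n_accepted)
    return 1.0 - sketched / full


# Illustrative numbers only: 1M-parameter model, 10k-entry sketch,
# 20 neighbors of which 10 pass screening.
print(floats_received_per_round(1_000_000, 10_000, 20, 10))  # (20000000, 10200000)
print(relative_savings(1_000_000, 10_000, 20, 10))
```

The savings expression $(|\mathcal{N}_i| - |\mathcal{S}_i|)/|\mathcal{N}_i| - k/d$ shows why the reduction depends on filtering effectiveness: if every neighbor is accepted, the sketches are a small $k/d$ overhead on top of the full-model traffic, and the gain comes entirely from the neighbors whose full models are never fetched.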

Citation

```bibtex
@misc{rangwala2025sketchguard,
  title={SketchGuard: Scaling Byzantine-Robust Decentralized Federated Learning via Sketch-Based Screening},
  author={Murtaza Rangwala and Farag Azzedin and Richard O. Sinnott and Rajkumar Buyya},
  year={2025},
  eprint={2510.07922},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2510.07922},
}
```